AI

December 17, 2024

Of the many lawsuits media giants have filed against AI companies for copyright infringement, the one filed by Dow Jones & Co. (publisher of the Wall Street Journal) and NYP Holdings Inc. (publisher of the New York Post) against Perplexity AI adds a new wrinkle.

Perplexity is a natural-language search engine that generates answers to user questions by scraping information from sources across the web, synthesizing the data and presenting it in an easily digestible chatbot interface. Its makers call it an “answer engine” because it’s meant to function like a mix of Wikipedia and ChatGPT. The plaintiffs, however, call it a thief that is violating Internet norms to take their content without compensation.

To me, this represents a particularly stark example of the problems with how AI platforms are operating vis-a-vis copyrighted materials, and one well worth analyzing.

According to its website, Perplexity pulls information “from the Internet the moment you ask a question, so information is always up-to-date.” Its AI seems to work by combining a large language model (LLM) with retrieval-augmented generation (RAG; oh, the acronyms!). As this is a blog about the law, not computer science, I won’t get too deep into this, but in short Perplexity uses AI to refine a user’s question and then searches the web for up-to-date info, which it synthesizes into a seemingly clear, concise and authoritative answer. Perplexity’s business model appears to be that people will gather information through Perplexity (paying for upgraded “Pro” access) instead of doing a traditional web search that returns links the user then follows to the primary sources of the information (which is one way those media sources generate subscriptions and ad views).
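For the curious, here is a toy sketch of the "retrieval" half of retrieval-augmented generation. To be clear, this is not Perplexity's actual system: the sample documents, the example.com URLs, the word-overlap scoring, and the function names are all assumptions made up for illustration. A real service would fetch live web pages, use a far more sophisticated ranking method, and hand the resulting prompt to an LLM, a step left out here.

```python
import re
from collections import Counter

# Hypothetical stand-ins for pages pulled from the web (not real articles).
DOCUMENTS = {
    "https://example.com/a": "The court ruled on the copyright claim on Tuesday.",
    "https://example.com/b": "A recipe for lentil soup with carrots and cumin.",
    "https://example.com/c": "Publishers filed a copyright lawsuit against an AI search company.",
}


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(question, documents, k=2):
    """Return the URLs of the k documents sharing the most words with the question."""
    question_words = Counter(tokenize(question))
    scored = []
    for url, text in documents.items():
        doc_words = Counter(tokenize(text))
        score = sum(min(question_words[w], doc_words[w]) for w in question_words)
        if score > 0:
            scored.append((score, url))
    # Highest score first; ties broken alphabetically by URL.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [url for _, url in scored[:k]]


def build_prompt(question, documents, k=2):
    """Assemble the text that would be handed to a language model."""
    sources = retrieve(question, documents, k)
    context = "\n".join(
        f"[{i + 1}] {documents[url]} ({url})" for i, url in enumerate(sources)
    )
    return f"Answer using only these sources:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    print(build_prompt("copyright lawsuit against AI company", DOCUMENTS))
```

The point for the legal analysis is the middle step: the text of the retrieved sources is copied into the prompt, so the publisher's words are physically present in what the model works from.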

Part of this requires Perplexity to scrape the websites of news outlets and other sources. Web scraping is an automated method to quickly extract large amounts of data from websites, using bots to find requested information by analyzing the HTML content of web pages, locating and extracting the desired data and then aggregating it into a structured format (like a spreadsheet or database) specified by the user. The data acquired this way can then be repurposed as the party doing the gathering sees fit. Is this copyright infringement? Probably, because copyright infringement is when you copy copyrighted material without permission.
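To make the scraping description above concrete, here is a minimal sketch using only Python's standard library. The sample HTML, the `ArticleParser` class, and the `extract_article` function are invented for illustration; real scrapers fetch pages over the network and cope with much messier markup, and nothing here reflects how any particular company's bot works.

```python
from html.parser import HTMLParser

# Hypothetical page content; a real scraper would download this from a website.
SAMPLE_HTML = (
    "<html><body><h1>Example Headline</h1>"
    "<p>First paragraph.</p><p>Second <b>bold</b> paragraph.</p>"
    "</body></html>"
)


class ArticleParser(HTMLParser):
    """Collects the text of the <h1> headline and every <p> paragraph."""

    def __init__(self):
        super().__init__()
        self._current = None  # "h1" or "p" while inside one of those tags
        self.headline = ""
        self.paragraphs = []

    def handle_starttag(self, tag, attrs):
        if tag in ("h1", "p"):
            self._current = tag
            if tag == "p":
                self.paragraphs.append("")

    def handle_endtag(self, tag):
        if tag == self._current:
            self._current = None

    def handle_data(self, data):
        if self._current == "h1":
            self.headline += data
        elif self._current == "p":
            self.paragraphs[-1] += data


def extract_article(html):
    """Turn raw HTML into a structured record, like one row of a spreadsheet."""
    parser = ArticleParser()
    parser.feed(html)
    parser.close()
    body = " ".join(p.strip() for p in parser.paragraphs)
    return {"headline": parser.headline.strip(), "body": body}


if __name__ == "__main__":
    print(extract_article(SAMPLE_HTML))
```

Note that the "body" field ends up holding the article's text word for word, which is exactly why scraping raises the copying question.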

To make matters worse, at least according to Dow Jones and NYP Holdings, Perplexity seems to have ignored the Robots Exclusion Protocol. This is a standard (implemented through a site's robots.txt file) that websites use to tell scraping bots, among other things, which pages they should not access or copy. However, despite the fact that these media outlets deploy this protocol, Perplexity spits out verbatim copies of some of the Plaintiffs’ articles and other materials.
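Here is a short sketch of how a well-behaved bot would consult a site's robots.txt before fetching a page, using Python's standard `urllib.robotparser` module. The robots.txt contents, the bot names ("ExampleBot", "OtherBot"), and the news.example.com URLs are hypothetical; they are not taken from the plaintiffs' actual sites or from the complaint.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: one named bot is barred from the whole site,
# while every other bot is barred only from /private/.
ROBOTS_TXT = """\
User-agent: ExampleBot
Disallow: /

User-agent: *
Disallow: /private/
"""


def is_allowed(robots_txt, user_agent, url):
    """Return True if the robots.txt rules permit this bot to fetch this URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for bot in ("ExampleBot", "OtherBot"):
        allowed = is_allowed(ROBOTS_TXT, bot, "https://news.example.com/article")
        print(bot, "may fetch the article:", allowed)
```

The important thing to notice is that the check is performed by the bot itself. The protocol is a request, not a lock: nothing in the code above physically stops a scraper that simply skips the `is_allowed` call, which is why the plaintiffs frame this as a violation of Internet norms.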

Of course, Perplexity has a defense, of sorts. Its CEO accuses the Plaintiffs and other media companies of being incredibly shortsighted and of wishing for a world in which AI didn’t exist. Perplexity says that media companies should work with, not against, AI companies to develop shared platforms. It’s not entirely clear what financial incentives Perplexity has offered or will offer to these and other content creators.

To me, it seems like Perplexity is the one that is incredibly shortsighted. The whole premise of copyright law is that if people are economically rewarded they will create new, useful and insightful (or at least, entertaining) materials. If Perplexity had its way, these creators would either not be paid at all or have to accept whatever it is that Perplexity deigns to offer. Presumably, this would not end well for the content creators, and eventually there would be no more reliable, up-to-date information to scrape. Moreover, Perplexity’s self-righteous claim that media companies just want to go back to the Stone Age (i.e., the 20th century) seems premised on a desire for a world in which the law allows anyone who wants copyrighted material to just take it without paying for it. And that’s not how the world works, at least for now.

November 19, 2024

If you’ve been following this blog, you’re familiar with the copyright infringement cases the New York Times and the Authors Guild have brought against OpenAI, makers of ChatGPT. So familiar, in fact, I won’t summarize these suits again. You can find a prior post about these cases here. The current dispute is interesting, at least to me (social media + law = fun for a nerd like me!) because it is another data point on how courts grapple with the blurry line between business and personal communications on social media.

Taking a step back for the non-litigators and non-lawyers in the room: In litigation, the parties must exchange materials that could have a bearing on the case. This generally covers a pretty broad range of materials and requires each party to produce all such materials that are in its “possession, custody, or control.” A party can also subpoena a non-party to the case for relevant materials in the non-party’s “possession, custody, or control.” However, where possible, it’s generally better to get discovery materials from a party instead of a non-party.

Turning back to the cases against OpenAI, the Authors Guild asked the tech company to produce texts and social media direct messages from more than 30 current and former employees, including some of the company’s top executives. It claims these communications may shed light on the issues in the case.

OpenAI has pushed back strongly. It claims that its employees’ social media accounts and personal phones are, well, personal and, therefore, not in its control. It also contends the Guild’s request might intrude on these persons’ privacy. OpenAI also rejects the Guild’s assumption that OpenAI’s search of its internal materials relevant to the case will be inadequate without its employees’ and former employees’ texts and DMs. It sniffs that the Guild should wait until it receives OpenAI’s documents before presuming as much (how rude!).

The Authors Guild has responded by pointing to OpenAI employees’ posts on X (yes, formerly Twitter) that clearly indicate they used their “personal” social media for work purposes. Same goes for their phones which, while they may not be paid for by the company, seem to have been used to text about business.

So, who’s right here? For starters, it seems pretty likely that, at least for current OpenAI employees, OpenAI could just tell people to turn over DMs and text messages. Assuming the employees don’t object or refuse, this should be enough to establish that OpenAI has “control.” OpenAI apparently hasn’t taken this basic step before refusing to produce DMs and text messages, which seems like a really good way to piss off the Magistrate Judge hearing this issue, especially if the employees violated OpenAI policies requiring work-related communications to take place on devices and accounts owned by the company (it should have such policies if it doesn’t!) or if the communications were clearly within the scope of an employee’s employment. Without that basic showing, it seems likely that the Authors Guild will prevail.

If it does (or if it doesn’t) there will be more about it here!

March 19, 2024

I’ve posted quite a bit about the growing legal battles involving AI companies, copyright infringement, and the right of publicity. These are still early days in the evolution of AI so it’s hard to envision all the ways the technology will develop and be utilized, but I predict AI is going to come up against even more existing intellectual property laws — specifically, trademark law.

For example, in its lawsuit against OpenAI and others (which I wrote about here), the New York Times Company alleged the Defendants engaged in trademark dilution. To take a step back, trademark dilution happens when someone uses a “famous” trademark (think Nike, McDonald’s, UPS, etc.) without permission, in a way that weakens or otherwise harms the reputation of the mark’s owner. This could happen when an AI platform, in response to a user query, delivers flat-out wrong or offensive content and attributes it to a famous brand such as the New York Times. Thus, according to the Times’ complaint, when asked “what the Times said are ‘the 15 most heart-healthy foods to eat,’” Bing Chat (a Microsoft AI product) responded with, among other things, “red wine (in moderation).” However, the actual Times article on the subject “did not provide a list of heart-healthy foods and did not even mention 12 of the 15 foods identified by Bing Chat (including red wine).” Who knows where Bing got its info from, but if the misinformation and misattribution causes people to think less of the “newspaper of record,” that could be construed as trademark dilution.

There are, however, potential pitfalls for brands that want to use trademark dilution to push back against AI platforms. Dilution is difficult to discover and expensive to pursue, and there can be a lot of ambiguity about whether a brand is “famous” and able to be significantly harmed by trademark dilution. In the New York Times’ case, the media giant has the resources to police the Internet and to file suits; nor should there be any dispute that it is a “famous” brand with a reputation that is vitally important. But smaller companies may not have the resources to search for situations where AI platforms incorrectly attribute information, or have a platform visible enough to meaningfully correct the record. Plus, calculating the brand damage from AI “hallucinations” will be very difficult and costly. Also, this area of the law does nothing for brands that aren’t “famous.”

Another area where trademark law and AI seem destined to face off is under the sections of the Lanham Act (the federal trademark law) that allow celebrities to sue for non-consensual use of their persona in a way that leads to consumer confusion, or others to sue for false advertising that influences consumer purchasing decisions. AI makes it pretty easy to manipulate a celebrity’s (or anyone’s) image or video to do and say whatever a user wants, which opens up all sorts of troublesome trademark possibilities.

Again, there are a couple of serious limitations here. For starters, the false endorsement prong likely only applies to celebrities or others who are well-known and does little to protect the rest of us. Perhaps more important (and terrifying), it seems likely that there will be significant issues in applying the Lanham Act’s provisions on false advertising in the context of deepfakes in political campaigns — like, for example, the recent robocall in advance of the New Hampshire primary that sounded like it was from President Biden. To avoid problems with the First Amendment, the Lanham Act is limited to commercial speech and thus will be largely useless for dealing with this type of AI abuse.

One other potentially interesting (and creepy) area where AI and trademark law might intersect is when it comes to humans making purchasing decisions through an AI interface. For example, a user tells a chatbot to order a case of “ShieldSafe disinfecting wipes,” but what shows up on their porch is a case of “ShieldPro disinfecting wipes” (hat tip to ChatGPT for suggesting these fictional names). While the mistake of a few letters might mean nothing to an algorithm (or even to a consumer who just wants to clean a toilet), it’s certainly going to anger a ShieldSafe Corp. that wants to prevent copycat companies from stealing their customers (and keep their business from going down that aforementioned toilet).

January 16, 2024

I closed out 2023 by writing about one lawsuit over AI and copyright and we’re starting 2024 the same way. In that last post, I focused on some of the issues I expect to come up this year in lawsuits against generative AI companies, as exemplified in a suit filed by the Authors Guild and some prominent novelists against OpenAI (the company behind ChatGPT). Now, the New York Times Company has joined the fray, filing suit late in December against Microsoft and several OpenAI affiliates. It’s a big milestone: The Times Company is the first major U.S. media organization to sue these tech behemoths for copyright infringement.

As always, at the heart of the matter is how AI works: Companies like OpenAI ingest existing text databases, which are often copyrighted, and write algorithms (called large language models, or LLMs) that detect patterns in the material so that they can then imitate it to create new content in response to user prompts.

The Times Company’s complaint, which was filed in the Southern District of New York on December 27, 2023, alleges that by using New York Times content to train its algorithms, the defendants directly infringed on the New York Times’ copyright. It further alleges that the defendants engaged in contributory copyright infringement and that Microsoft engaged in vicarious copyright infringement. (In short, contributory copyright infringement is when a defendant was aware of infringing activity and induced or contributed to that activity; vicarious copyright infringement is when a defendant could have prevented — but didn’t — a direct infringer from acting, and financially benefits from the infringing activity.) Finally, the complaint alleges that the defendants violated the Digital Millennium Copyright Act by removing copyright management information included in the New York Times’ materials, and accuses the defendants of engaging in unfair competition and trademark dilution.

The defendants, as always, are expected to claim they’re protected under “fair use” because their unlicensed use of copyrighted content to train their algorithms is transformative.

What all this means is that while 2023 was the year that generative AI exploded into the public’s consciousness, 2024 (and beyond) will be when we find out what federal courts think of the underlying processes fueling this latest data revolution.

I’ve read the New York Times’ complaint (so you don’t have to) and here are some takeaways:

- The Times Company tried (unsuccessfully) to negotiate with OpenAI and Microsoft (a major investor in OpenAI) but was unable to reach an agreement that would “ensure [The Times] received fair value for the use of its content.” This likely hurts the defendants’ claims of fair use.

- As in the other lawsuits against OpenAI and similar companies, there’s an input problem and an output problem. The input problem comes from the AI companies ingesting huge amounts of copyrighted data from the web. The output problem comes from the algorithms trained on the data spitting out material that is identical (or nearly identical) to what they ingested. In these situations, I think it’s going to be rough going for the AI companies’ fair use claim. However, they have a better fair use argument where the AI models create content “in the style of” something else.

- The Times Company’s case against Microsoft comes, in part, from the fact that Microsoft is alleged to have “created and operated bespoke computing systems to execute the mass copyright infringement . . .” described in the complaint.

- OpenAI allegedly favored “high-quality content, including content from the Times” in training its LLMs.

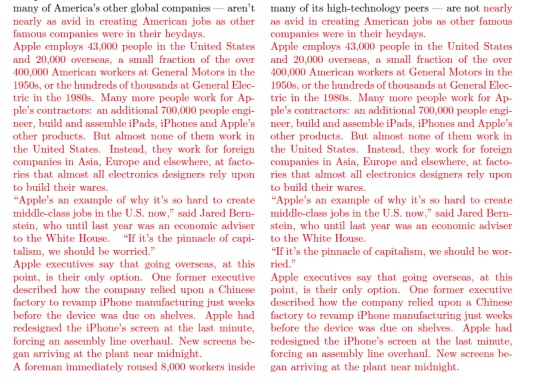

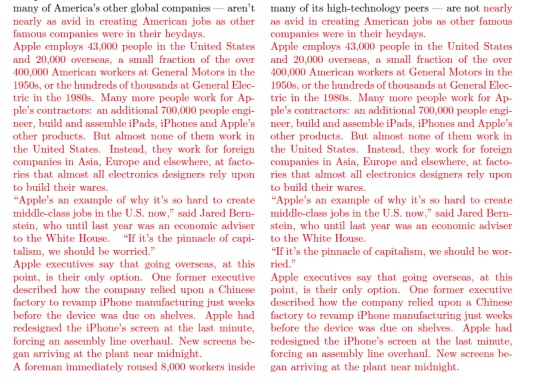

- When prompted, ChatGPT can regurgitate large portions of the Times’ journalism nearly verbatim. Here’s an example taken from the complaint showing the output of ChatGPT on the left in response to “minimal prompting,” and the original piece from the New York Times on the right. (The differences are in black.)

- According to the New York Times this content, easily accessible for free through OpenAI, would normally only be available behind their paywall. The complaint also contains similar examples from Bing Chat (a Microsoft product) that go far beyond what you would get in a normal search using Bing. (In response, OpenAI says that this kind of wholesale reproduction is rare and is prohibited by its terms of service. I presume that OpenAI has since fixed this issue, but that doesn’t absolve OpenAI of liability.)

- Because OpenAI keeps the design and training of its GPT algorithms secret, expect the confidentiality order in this case to be intense.

- While the New York Times Company can afford to fight this battle, many smaller news organizations lack the resources to do the same. In the complaint, the Times Company warns of the potential harm to society of AI-generated “news,” including its devastating effect on local journalism which, if the past is any indication, will be bad for all of us.

Stay tuned. OpenAI and Microsoft should file their response, which I expect will be a motion to dismiss, in late February or so. When I get it, I’ll see you back here.

December 19, 2023

This year has brought us some of the early rounds of the fights between creators and AI companies, notably Microsoft, Meta, and OpenAI (the company behind ChatGPT). In addition to the Hollywood strikes, we’ve also seen several lawsuits between copyright owners and companies developing AI products. The claims largely focus on the AI companies’ creation of “large language models” or “LLMs.” (By way of background, LLMs are algorithms that take a large amount of information and use it to detect patterns so that they can create their own “original” content in response to user prompts.)

Among these cases is one filed by the Authors Guild and several prominent writers (including Jonathan Franzen and Jodi Picoult) in the Southern District of New York. It alleges OpenAI ingested large databases of copyrighted materials, including the plaintiffs’ works, to train its algorithms. In early December, the plaintiffs amended their complaint to add Microsoft as a defendant, alleging that Microsoft knew about and assisted OpenAI in its infringement of the plaintiffs’ copyrights.

Because it is the end of the year, here are five “things to look for in 2024” in this case (and others like it):

- What will defendants argue on fair use and how will the Supreme Court’s 2023 decision in Goldsmith impact this argument? (In 2023, SCOTUS ruled that Andy Warhol’s manipulation of a photograph by Lynn Goldsmith was not transformative enough to qualify as fair use.)

- Does the fact that the output of platforms like ChatGPT isn’t copyrightable have any impact on the fair use analysis? The whole idea behind fair use is to encourage subsequent creators to build on the work of earlier creators, but what happens to this analysis when the later “creator” is merely a computer doing what it was programmed to do?

- Will the fact that OpenAI recently inked a deal with Axel Springer (publisher of Politico and Business Insider) to allow OpenAI to summarize its news articles as well as use its content as training data for OpenAI’s large language models affect OpenAI’s fair use argument?

- What impact, if any, will this and other similar cases have on the business model for AI? Big companies and venture capital firms have invested heavily in AI, but if courts rule they must pay authors and other creators for their copyrighted works it dramatically changes the profitability of this model. Naturally, tech companies are putting forth numerous arguments against payment, including how little each individual creator would get considering how large the total pool of creators is, how it would curb innovation, etc. (One I find compelling is the idea that training a machine on copyrighted text is no different from a human reading a bunch of books and then using the knowledge and sense of style gained to go out and write one of their own.)

- Is Microsoft, which sells (copyrighted) software, ok with a competitor training its platform on copyrighted materials? I’m guessing that’s probably not ok.

These are all big questions with a lot at stake. For good and for ill, we live in exciting times, and in the arena of copyright and IP law I guarantee that 2024 will be an exciting year. See you then!